As a member of the London Java Community, I was gratified to hear of a Java conference, JAX London, on my own doorstep and eagerly signed up. It had been a while since I had attended a Java conference. I used to be a regular attendee of JavaOne, but over the last few years it has lost its lustre, especially now that it seems to be a bolt-on to Oracle OpenWorld. Maybe that will change in future.

Day 1:

I eschewed the workshops on Android and JEE6/7 development in favour of talks focused on Spring technology. There were some excellent talks on new additions to Spring, such as Spring Data, as well as on the upcoming functionality in the next version of Spring. There were also some good introductions to Spring Batch and Spring Security. A talk was also given on the interoperability of Spring and Scala, which was informative on using Java DI frameworks with Scala rather than inherent Scala approaches (see the Cake pattern).

The most useful session in my eyes was the round table discussion at the end of the day, in which the audience could put questions to the Spring creators themselves. This was especially fruitful, as you gain nuggets of wisdom from these experts in their fields that you wouldn't normally get outside a vis-à-vis conversation.

Day 2:

My second day at the conference was mostly Agile-focused. I attended a very good talk on the Scrum Product Backlog by Roman Pichler, in which many salient points of advice were offered.

The next two talks addressed software quality. The first, on Software Craftsmanship, was by Sandro Mancuso. This talk reinforced the idea that software development is a craft rather than purely engineering. He extolled the principles of the Software Craftsmanship movement: self-improvement, knowledge sharing, professionalism, passion and care. I resisted the urge to jump up from the audience and shout 'Amen, brother', but that was what I was thinking. The next talk, titled Slow and Dirty, by Jason Gorman, refuted the notion that we should release dirty code to meet unrealistic deadlines and worry about the clean-up later. One of his memorable phrases was 'Anaerobic Software Development', and I'm sure a lot of developers will concur with the sentiment. It describes situations where development teams start projects at an unsustainably fast rate, causing entropy to build up in their code. After a short while this causes intense pain, and the team has to stop for weeks, maybe months, until enough detritus is removed for the project to move forward again. That is if you're lucky; sometimes there's so much build-up (holding back on the profanity :)) that the project must be dropped entirely and a new one started. His guiding principle was that if you care about quality all of the time, you don't get into these situations. Next time a manager tells you that we need something quick and dirty, cutting corners on quality to get something out of the door, be prepared to push back as much as possible. You're only making a rod for your own back and building technical debt that must be repaid later.

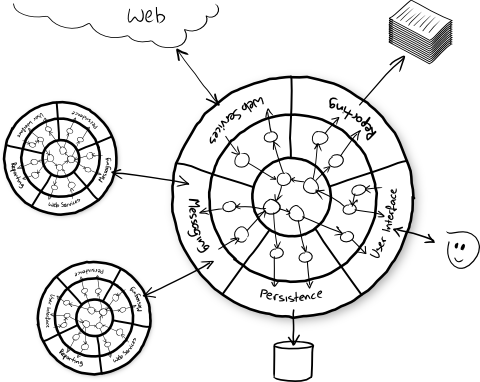

Given my interest in concurrency, the next session I attended was on message passing by Dr Russel Winder, who opined (with many interesting, witty and funny anecdotes) on why shared-memory multithreading, the prevailing approach in currently popular languages, should not be used. Instead, it should be replaced by higher-level constructs such as Actors, CSP and Dataflow, so that the issues seen in contemporary approaches are eradicated. He then gave a demonstration of Actors using the GPars library, of which he is one of the authors. The 50-minute talk did not allow him to give a fuller treatise on the subject, but it has definitely piqued my interest in that library for further investigation.
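GPars is aimed mainly at Groovy, and I won't try to reproduce its API here. As a rough illustration of the actor idea in plain Java, here is a minimal sketch under my own assumptions (the `CounterActor` class and its message types are hypothetical): the actor's state is touched only by its own thread, and other threads interact with it solely by dropping messages into its mailbox, so no lock guards the state itself.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical minimal actor: private state, a FIFO mailbox and one thread that handles messages in order.
class CounterActor {
    // Message types: the only way other threads can change or observe the counter
    private static final class Increment {
        final long by;
        Increment(long by) { this.by = by; }
    }
    private static final class Get {
        final CompletableFuture<Long> reply = new CompletableFuture<>();
    }
    private static final Object STOP = new Object();

    private final BlockingQueue<Object> mailbox = new LinkedBlockingQueue<>();
    private final Thread worker;
    private long count = 0; // confined to the worker thread, so it needs no lock

    CounterActor() {
        worker = new Thread(this::loop, "counter-actor");
        worker.setDaemon(true); // don't keep the JVM alive if stop() is never called
        worker.start();
    }

    // Process one message at a time, in arrival order
    private void loop() {
        try {
            while (true) {
                Object msg = mailbox.take();
                if (msg == STOP) {
                    return;
                } else if (msg instanceof Increment) {
                    count += ((Increment) msg).by;
                } else if (msg instanceof Get) {
                    ((Get) msg).reply.complete(count);
                }
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    // Fire-and-forget send: callers never block on the counter itself
    void increment(long by) {
        mailbox.add(new Increment(by));
    }

    // Request/reply: the answer comes back asynchronously as a future
    CompletableFuture<Long> get() {
        Get request = new Get();
        mailbox.add(request);
        return request.reply;
    }

    // Ask the actor to finish and wait for its thread to exit
    void stop() throws InterruptedException {
        mailbox.add(STOP);
        worker.join();
    }
}
```

If four threads each call `increment(1)` a thousand times and have all finished, a subsequent `get().join()` returns 4000: the mailbox is FIFO, so the `Get` request is handled only after every earlier increment.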

The last session of the day was given by Martijn Verburg and Ben Evans on some of the new features of Java 7. They then ran an open coding session on some of the features of Project Coin. Unfortunately, this turned out to be a thought experiment for me, as I hadn't brought my laptop to the session, although I did get to ask Martijn some questions about the new concurrency features in Java 7.
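For anyone who, like me, missed the hands-on part, here is a small sketch of several Project Coin features that shipped in Java 7: strings in `switch`, the diamond operator, try-with-resources, multi-catch, and binary literals with underscores in numeric literals. The `CoinDemo` class and its methods are hypothetical examples of my own, not code from the session.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;

// Hypothetical examples of Java 7 Project Coin language features
class CoinDemo {
    // Binary literal plus underscores in numeric literals
    static final int MASK = 0b1111_0000;      // 240
    static final long ONE_MILLION = 1_000_000L;

    // Strings in switch
    static int dayNumber(String day) {
        switch (day) {
            case "Day 1": return 1;
            case "Day 2": return 2;
            case "Day 3": return 3;
            default:      return -1;
        }
    }

    // Diamond operator infers <String>; try-with-resources closes the reader automatically
    static List<String> readLines(String text) throws IOException {
        List<String> lines = new ArrayList<>();
        try (BufferedReader reader = new BufferedReader(new StringReader(text))) {
            String line;
            while ((line = reader.readLine()) != null) {
                lines.add(line);
            }
        }
        return lines;
    }

    // Multi-catch: one handler for two unrelated exception types
    static int ratioOrDefault(String numerator, String denominator, int fallback) {
        try {
            return Integer.parseInt(numerator) / Integer.parseInt(denominator);
        } catch (NumberFormatException | ArithmeticException e) {
            return fallback; // bad number or division by zero
        }
    }
}
```

For example, `ratioOrDefault("10", "0", -1)` falls into the shared handler via `ArithmeticException` and returns -1, while `ratioOrDefault("ten", "2", -1)` gets there via `NumberFormatException`.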

Day 3:

In my previous blog post, I opined about whether Java had reached its peak. I may have to revise my opinion somewhat after two excellent keynotes from James Governor of RedMonk and Simon Ritter of Oracle. The latter reinforced my view that the really interesting stuff will happen in Java 8, with lambdas and modularity. It was also interesting to see Oracle's roadmap for Java: they intend to release a new version of Java every two years, and the roadmap shown ran up to 2019. I wonder what Java will look like then?

Fredrik Öhrström of Oracle then gave a very informative talk on some of the expected features of Java 8, specifically lambdas, map/filter/reduce and interface extensions. Following Oracle's takeover of Sun, there are now two competing JVMs: JRockit and Sun HotSpot. The plan is for JRockit functionality to be subsumed into the HotSpot VM, with the non-enterprise JRockit features added incrementally later.
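At the time of the conference Java 8 was still in development, so details could change. For reference, here is a small sketch of these features in the form they eventually took when Java 8 shipped: a default method playing the role of an interface extension, and a filter/map/reduce pipeline written with lambdas and method references. The `Talk` interface and `TalkStats` helpers are hypothetical.

```java
import java.util.List;
import java.util.Locale;
import java.util.stream.Collectors;

// Hypothetical interface: two abstract methods plus a default method
interface Talk {
    String title();
    int minutes();

    // Default method ("interface extension"): implementers inherit it without overriding it
    default boolean isLong() {
        return minutes() > 45;
    }
}

class TalkStats {
    // Simple factory; the anonymous class captures the effectively final parameters
    static Talk talk(String title, int minutes) {
        return new Talk() {
            @Override public String title() { return title; }
            @Override public int minutes() { return minutes; }
        };
    }

    // filter -> map -> reduce: total minutes of the long talks
    static int totalLongTalkMinutes(List<Talk> talks) {
        return talks.stream()
                .filter(Talk::isLong)       // keep talks over 45 minutes
                .map(Talk::minutes)         // Talk -> Integer
                .reduce(0, Integer::sum);   // fold with + starting from 0
    }

    // map with a lambda, then collect the results into a list
    static List<String> upperCaseTitles(List<Talk> talks) {
        return talks.stream()
                .map(t -> t.title().toUpperCase(Locale.ROOT))
                .collect(Collectors.toList());
    }
}
```

With talks of 50, 40 and 60 minutes, `totalLongTalkMinutes` keeps the first and third and reduces them to 110.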

The next lively talk, on performance tuning, was built around a well-known adage: 'Measure, don't guess'. The presentation centred on a poorly performing web application. By measuring throughput and load with tools such as JMeter, VisualVM and vmstat, the presenters showed how to investigate and eventually find the culprit. One should never shoot in the dark when performance tuning. A scientific approach should be followed, such as baselining your application before any changes are made, so as to ensure that each change actually leads to an improvement rather than a degradation.

I then attended another talk on Java 8 concurrency, given by Martijn Verburg and Ben Evans, advocating the use of the Java concurrency library (java.util.concurrent) rather than relying on outdated constructs such as synchronized. They also explained why parallelism will become more important in the coming years.
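As a rough illustration of the contrast (my own sketch, not code from the talk; the `HitCounters` names are hypothetical, and it uses Java 8 APIs), here is a page-hit counter written first with `synchronized` methods guarding a plain `HashMap`, and then with `java.util.concurrent` building blocks: `ConcurrentHashMap`, `LongAdder` and an `ExecutorService`. Both produce the correct count; the second has no single lock that every update must acquire.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.LongAdder;

class HitCounters {
    // Old style: one monitor lock guards the whole map, so every update is serialised
    static final class SynchronizedCounter {
        private final Map<String, Long> hits = new HashMap<>();

        synchronized void hit(String page) {
            hits.merge(page, 1L, Long::sum);
        }

        synchronized long get(String page) {
            return hits.getOrDefault(page, 0L);
        }
    }

    // java.util.concurrent style: no synchronized blocks in user code
    static final class ConcurrentCounter {
        private final ConcurrentHashMap<String, LongAdder> hits = new ConcurrentHashMap<>();

        void hit(String page) {
            LongAdder adder = hits.get(page); // fast path: lock-free read
            if (adder == null) {
                adder = hits.computeIfAbsent(page, k -> new LongAdder()); // created at most once per key
            }
            adder.increment(); // LongAdder spreads contention across internal cells
        }

        long get(String page) {
            LongAdder adder = hits.get(page);
            return adder == null ? 0L : adder.sum();
        }
    }

    // Runs `hit` perTask times in each of `tasks` tasks on a fixed pool, then waits for them all
    static void hammer(Runnable hit, int tasks, int perTask) throws InterruptedException {
        ExecutorService pool = Executors.newFixedThreadPool(tasks);
        for (int t = 0; t < tasks; t++) {
            pool.execute(() -> {
                for (int i = 0; i < perTask; i++) {
                    hit.run();
                }
            });
        }
        pool.shutdown();
        if (!pool.awaitTermination(1, TimeUnit.MINUTES)) {
            throw new IllegalStateException("tasks did not finish in time");
        }
    }
}
```

Calling `hammer(() -> counter.hit("/jax"), 4, 10_000)` on either counter should leave `get("/jax")` at 40,000; the difference lies in how much the threads contend, which is exactly the kind of thing to measure rather than guess.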

Changing tack for a few sessions, I then attended Ted Neward's NoSQL session. This was a talk on what NoSQL actually means, as there's a lot of ambiguity in the community on this fundamental point. He then compared some of the common NoSQL variants, such as db4o, Cassandra and MongoDB, and the typical situations in which each could be used, a point that is sometimes missed: use the right tool for the job. Ted Neward is a great communicator, and the session was enlightening in all respects.

The final session of the day was given by LMAX on their Disruptor pattern. This was very interesting in many ways, as it seemed to go against the grain of previous sessions on concurrency. LMAX is a financial exchange, so latency throughout their system is extremely critical. Through empirical evidence they demonstrated that accepted approaches to concurrency performed poorly because of the overhead of dealing with the JVM and the JMM (Java Memory Model). This was especially interesting because, as Java programmers, we're shielded from the low-level details of the architecture our programs run on, and we assume we need not worry about it too much, as it's the JVM's problem, not ours. This is no longer the case. We now have to be increasingly wary of latencies between the processor, the L1 and L2 caches and main memory, as well as of the assembly code the JIT generates: a mechanical sympathy, if you will. Their innovative solution rests on a lock-free ring buffer (the fundamental data structure at the heart of the Disruptor) rather than the traditional work queue/thread approaches. I've not really given the Disruptor pattern the attention it deserves, as it really is a sea change in how applications could be architected, from both a business logic and a data point of view. I will definitely be investigating this topic further. There is a great introduction to it by Martin Fowler, and it's also advantageous that the Disruptor is an open source project.
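To make the ring-buffer idea a little more concrete, here is a deliberately tiny sketch. It is not the Disruptor's API or implementation, and the `SpscRingBuffer` class is hypothetical. It supports only one producer thread and one consumer thread, preallocates its slots, uses a power-of-two capacity so that a sequence number maps to a slot with a bit mask, and coordinates the two threads purely through two ever-increasing sequence counters instead of locks. The consumer drains everything published so far in one batch, loosely echoing the Disruptor's batching.

```java
import java.util.concurrent.atomic.AtomicLong;
import java.util.function.LongConsumer;

// Hypothetical single-producer/single-consumer lock-free ring buffer of longs.
final class SpscRingBuffer {
    private final long[] slots;   // preallocated once, reused forever
    private final int mask;       // capacity - 1, valid because capacity is a power of two
    private final AtomicLong head = new AtomicLong(); // next sequence to consume (written only by the consumer)
    private final AtomicLong tail = new AtomicLong(); // next sequence to publish (written only by the producer)

    SpscRingBuffer(int capacity) {
        if (capacity <= 0 || Integer.bitCount(capacity) != 1) {
            throw new IllegalArgumentException("capacity must be a positive power of two");
        }
        slots = new long[capacity];
        mask = capacity - 1;
    }

    // Producer thread only. Returns false instead of blocking when the buffer is full.
    boolean offer(long value) {
        long t = tail.get();
        if (t - head.get() == slots.length) {
            return false; // consumer has not freed this slot yet
        }
        slots[(int) (t & mask)] = value;
        tail.lazySet(t + 1); // ordered store: the slot write becomes visible before the new tail
        return true;
    }

    // Consumer thread only. Passes every value published so far to the handler, in order,
    // then releases those slots in one step. Returns how many values were consumed.
    int drain(LongConsumer handler) {
        long h = head.get();
        long available = tail.get(); // volatile read: sees slot writes published before this tail
        for (long seq = h; seq < available; seq++) {
            handler.accept(slots[(int) (seq & mask)]);
        }
        head.lazySet(available); // hand the drained slots back to the producer
        return (int) (available - h);
    }
}
```

The real Disruptor goes much further (multiple producers and consumers, pluggable wait strategies, padding against false sharing), so treat this only as a way into the core idea of sequence-coordinated, preallocated slots.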

Last thoughts:

Sadly, there were at least 10 other sessions I would have liked to attend. I would have loved to follow Ian Robinson's session on Neo4j, as well as some of the cloud offerings (some former colleagues of mine from Cloudsoft were presenting), but alas the timetable didn't allow it. All in all, a feature-packed three days which ended far too quickly. It was great to meet so many kindred spirits who are as passionate about technology as I am. I'm already looking forward to JAX 2012 :).